How Scene Graphs Transform Images into Rich Visual Narratives

The intersection of scene graphs, language models, and computer vision for smarter image comprehension

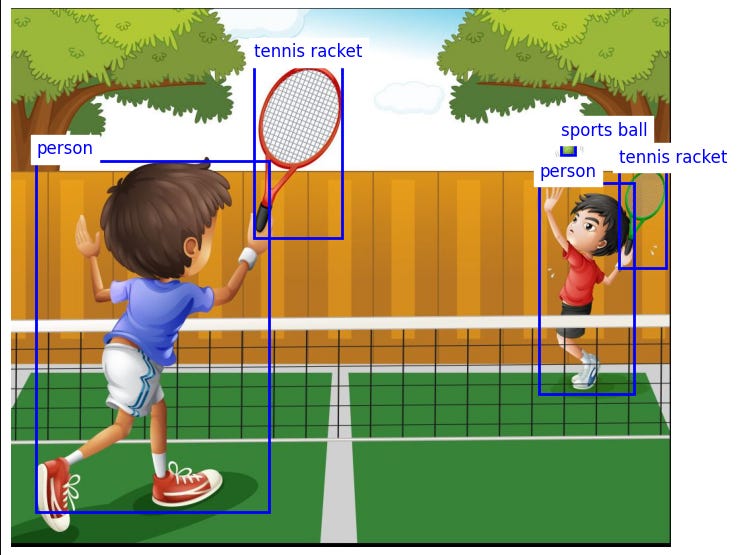

To truly understand an image, inferring the relationships between the objects within it is crucial for telling a "visual story." Scene graphs let us map out how the objects in an image interact with one another. In this article, I will walk you through building a visual story of the image above, using YOLOv5 for object detection and NetworkX for building the relationship graph, and visualizing these interactions to create a meaningful image representation. We will also use an LLM to describe the graph’s output.

In the image above, we see two young boys engaged in a tennis match on a court. The scene captures a dynamic moment where both players are actively involved in the game. The boy on the left, dressed in blue, is positioned near the net with his racket raised (much like Roger Federer during his peak), indicating he is likely preparing to hit the ball. His posture suggests he is about to swing, adding a sense of motion to the image. On the right, the second boy, dressed in red, appears to be in a defensive position, preparing to receive the ball, which is visible in the air above his head.

The setting includes a tennis court with a net in the center, separating the two players, and a wooden fence in the background, with trees on either side. The image captures the energy of the game, with the players seemingly mid-action, suggesting a fast-paced exchange. Now, if we want to build a relationship model that gives an explainable account of this match, we need to capture the positions and interactions of the players and the ball.

In this case, we combine object detection with relationship extraction to produce a comprehensive scene graph of a tennis match between two players. This can be done with YOLOv5, which provides the bounding boxes of the objects, and NetworkX, which stores the relationships between them. After that, we can hook up the entire scene to an LLM to get the explanation.

Object Detection Using YOLOv5

We begin by detecting the main objects within the tennis match image using the pre-trained YOLOv5 model. YOLOv5's architecture allows for the real-time identification of objects such as people, tennis rackets, and the ball. The results are shown in the form of bounding boxes that localize each object in the image. This process involves passing the image through the YOLOv5 model, which outputs the detected objects and their respective bounding box coordinates.

The primary objects detected in this image are:

Person 1: The player on the left side of the court.

Person 2: The player on the right side.

Tennis racket: Held by both players.

Sports ball: Positioned near the center of the court.

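Tennis court and net: The setting. Neither is a class in the COCO label set used by the pre-trained YOLOv5 weights, so the detector will not return them; they are added to the scene graph by hand later on.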
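The left/right labelling of the two players can be derived from the bounding boxes themselves instead of a fixed pixel cutoff. The following is a minimal sketch, assuming detections arrive as `(label, x, y, width, height)` tuples; the `label_players` helper and the sample boxes are hypothetical and only illustrate the idea.

```python
def label_players(objects):
    """Name person detections 'Person 1', 'Person 2', ... from left to right.

    `objects` holds (label, x, y, width, height) tuples, where (x, y) is the
    top-left corner of the bounding box. Illustrative helper only; it is not
    part of YOLOv5 or NetworkX.
    """
    people = [obj for obj in objects if obj[0] == "person"]
    # Sort by horizontal box center so the order does not depend on box width.
    people.sort(key=lambda obj: obj[1] + obj[3] / 2)
    return {f"Person {i}": obj for i, obj in enumerate(people, start=1)}


# Made-up boxes for illustration only.
sample = [
    ("person", 420, 150, 90, 220),
    ("sports ball", 400, 60, 20, 20),
    ("person", 80, 140, 100, 240),
]
players = label_players(sample)
print(players["Person 1"])  # the left-hand player
```

Sorting by box center keeps the assignment valid if the image is resized or the players stand elsewhere in the frame.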

Code for Object Detection:

import torch
import cv2
import numpy as np
import matplotlib.pyplot as plt
import networkx as nx
from matplotlib.patches import Rectangle
from PIL import Image
import math

# Step 1: Load the pre-trained YOLOv5 model
model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

# Step 2: Detect objects in the image using the YOLOv5 vision model
def detect_objects(image_path: str):
    """Return one (label, x, y, width, height) tuple per detected object."""
    img = Image.open(image_path)
    results = model(img)
    detected_objects = []
    for *box, conf, cls in results.xyxy[0]:  # bbox format: (x1, y1, x2, y2)
        x1, y1, x2, y2 = map(int, box)
        label = results.names[int(cls)]
        detected_objects.append((label, x1, y1, x2 - x1, y2 - y1))  # label, x, y, width, height
    return detected_objects

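def calculate_distance(obj1, obj2):
    """Euclidean distance between the top-left corners of two bounding boxes."""
    x1, y1 = obj1[1], obj1[2]  # top-left corner of the first object
    x2, y2 = obj2[1], obj2[2]  # top-left corner of the second object
    return math.sqrt((x2 - x1)**2 + (y2 - y1)**2)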

# Step 3: Enhanced Relationship and Attribute Extraction
def detect_relationships(objects):
    relationships = []
    # The two players are split by a hard-coded x-coordinate (250 px) chosen for this image
    player1 = next((obj for obj in objects if obj[0] == "person" and obj[1] < 250), None)
    player2 = next((obj for obj in objects if obj[0] == "person" and obj[1] >= 250), None)
    sports_ball = next((obj for obj in objects if obj[0] == "sports ball"), None)
    # "net" is not a COCO class, so this stays None with the stock yolov5s weights
    net = next((obj for obj in objects if obj[0] == "net"), None)

    # Add spatial relationships between the ball and players
    if player1 and player2 and sports_ball:
        dist1 = calculate_distance(player1, sports_ball)
        dist2 = calculate_distance(player2, sports_ball)
        if dist1 < dist2:
            relationships.append(("sports ball", "closer to", "Person 1 (server)"))
            relationships.append(("sports ball", "farther from", "Person 2 (receiver)"))
        else:
            relationships.append(("sports ball", "closer to", "Person 2 (receiver)"))
            relationships.append(("sports ball", "farther from", "Person 1 (server)"))
        # The server/receiver roles are assumed, not inferred from the image
        relationships.append(("Person 1 (server)", "about to serve", "sports ball"))
        relationships.append(("Person 2 (receiver)", "waiting to receive", "sports ball"))

    if net:
        relationships.append(("Person 1 (server)", "near", "net"))
        relationships.append(("Person 2 (receiver)", "near", "net"))
        relationships.append(("sports ball", "above", "net"))

    # Pair every person with every racket and court detection
    for obj1 in objects:
        for obj2 in objects:
            if obj1[0] == "person" and obj2[0] == "tennis racket":
                relationships.append((obj1[0], "holding", obj2[0]))
            if obj1[0] == "person" and obj2[0] == "tennis court":
                relationships.append((obj1[0], "on", obj2[0]))

    if player1 and player2:
        relationships.append(("Person 1 (server)", "playing against", "Person 2 (receiver)"))
    return relationships

def add_object_attributes(G, object_name, attributes):
    """Attach each attribute to the object as a green node linked by a "has" edge."""
    for attribute in attributes:
        G.add_node(attribute, color="green")
        G.add_edge(object_name, attribute, relationship="has")

def add_relationships_with_details(G, objects, relationships):
    """Add detected objects, hard-coded attributes, and relationships to graph G."""
    for obj in objects:
        G.add_node(obj[0], color="red")
        if obj[0] == "person":
            add_object_attributes(G, obj[0], ["wearing shorts", "wearing sneakers", "holding racket"])
        if obj[0] == "sports ball":
            add_object_attributes(G, obj[0], ["round", "near court"])
    for subj, predicate, target in relationships:
        G.add_edge(subj, target, label=predicate)

# Step 4: Plot the detailed scene graph
def plot_detailed_scene_graph(image_path: str, objects, relationships):
    image = Image.open(image_path)
    image = np.array(image)
    plt.figure(figsize=(10, 7))
    ax = plt.gca()
    ax.imshow(image)
    for (label, x, y, w, h) in objects:
        rect = Rectangle((x, y), w, h, linewidth=2, edgecolor='blue', facecolor='none')
        ax.add_patch(rect)
        plt.text(x, y - 10, label, color='blue', fontsize=12, backgroundcolor='white')
    plt.axis('off')
    plt.show()

# Step 5: Use the uploaded image
image_path = '/content/two boys.JPG'

# Step 6: Detect objects and relationships in the image
objects = detect_objects(image_path)
relationships = detect_relationships(objects)

# Step 7: Plot the scene here
plot_detailed_scene_graph(image_path, objects, relationships)
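The relationship rules above are largely hard-coded: distances are measured between top-left corners, and every person is linked to every racket. One possible refinement, sketched here under the same `(label, x, y, width, height)` tuple assumption, measures distances between box centers and assigns each racket to the single person whose box overlaps it most. The helper names and sample boxes are hypothetical, and this is an illustration rather than the method used in the article.

```python
import math


def center(obj):
    """Center point of a (label, x, y, width, height) box."""
    _, x, y, w, h = obj
    return (x + w / 2, y + h / 2)


def center_distance(a, b):
    """Euclidean distance between the centers of two boxes."""
    (ax, ay), (bx, by) = center(a), center(b)
    return math.hypot(bx - ax, by - ay)


def overlap_area(a, b):
    """Area of the intersection of two boxes (0 if they do not overlap)."""
    _, ax, ay, aw, ah = a
    _, bx, by, bw, bh = b
    dx = min(ax + aw, bx + bw) - max(ax, bx)
    dy = min(ay + ah, by + bh) - max(ay, by)
    return max(dx, 0) * max(dy, 0)


def holding_relationships(objects):
    """Link each racket to the one person whose box overlaps it most."""
    people = [(i, o) for i, o in enumerate(objects) if o[0] == "person"]
    relationships = []
    for racket in (o for o in objects if o[0] == "tennis racket"):
        best = max(people, key=lambda p: overlap_area(p[1], racket), default=None)
        if best is not None and overlap_area(best[1], racket) > 0:
            relationships.append((f"person #{best[0]}", "holding", "tennis racket"))
    return relationships


# Made-up boxes for illustration only.
boxes = [
    ("person", 80, 140, 100, 240),
    ("person", 420, 150, 90, 220),
    ("tennis racket", 150, 120, 60, 60),
    ("sports ball", 400, 60, 20, 20),
]
print(holding_relationships(boxes))
print(center_distance(boxes[0], boxes[3]), center_distance(boxes[1], boxes[3]))
```

Box overlap is only a rough proxy for "holding", but it avoids emitting one edge per person-racket pair regardless of where the racket actually is.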

Relationship Extraction and Visualization

We employed NetworkX to visualize the scene graph, representing each object and its relationships. The graph not only shows which objects are interacting but also the nature of these interactions.

The scene graph provides a structured, visual interpretation of the tennis match.

Person 1 and Person 2 are connected by the relationship "aiming towards", indicating that Person 1 is preparing to hit the ball towards Person 2.

The ball is connected to Person 1 by the relationship "about to hit".

The ball is also connected to the net with the relationship "above", demonstrating the ball’s current position in the game.

In this visualization, nodes representing people are shown in orange, objects such as the racket and ball in gray, and the environment (i.e., the tennis court and net) in green. Edges between these nodes represent the interactions, and each edge is labeled with its relationship and a weight indicating the strength or significance of the interaction. In this example, the weights are assigned by hand rather than computed from the image.

Plotting a Scene Graph

import networkx as nx
import matplotlib.pyplot as plt
import numpy as np

# Simulate relationships
def add_relationships():
    G = nx.DiGraph()

    # Add nodes
    G.add_node("Person 1", color="red")
    G.add_node("Person 2", color="red")
    G.add_node("ball", color="blue")
    G.add_node("Racket", color="blue")
    G.add_node("Tennis court", color="green")
    G.add_node("net", color="green")

    # Add edges (relationships) with hand-assigned weights
    G.add_edge("Person 1", "ball", label="about to hit", weight=3.0)
    G.add_edge("Person 2", "ball", label="waiting to receive", weight=2.5)
    G.add_edge("Person 1", "Racket", label="holding", weight=4.0)
    G.add_edge("Person 1", "Tennis court", label="on", weight=1.0)
    G.add_edge("Person 2", "Tennis court", label="on", weight=1.0)
    G.add_edge("Person 1", "net", label="near", weight=1.5)
    G.add_edge("Person 2", "net", label="near", weight=1.5)
    G.add_edge("ball", "net", label="above", weight=2.0)
    G.add_edge("Person 1", "Person 2", label="aiming towards", weight=3.5)
    # Note: a DiGraph keeps one edge per (source, target) pair, so this call
    # overwrites the "waiting to receive" edge above; nx.MultiDiGraph would keep both.
    G.add_edge("Person 2", "ball", label="preparing to swing", weight=3.0)
    return G

def plot_circular_scene_graph(G, center_node, node_colors):
    edge_nodes = set(G) - {center_node}
    pos = nx.circular_layout(G.subgraph(edge_nodes))
    pos[center_node] = np.array([0, 0])
    plt.figure(figsize=(10, 10), dpi=300)
    node_color_values = [node_colors.get(node, "lightblue") for node in G.nodes()]
    nx.draw(G, pos, with_labels=True, node_color=node_color_values, node_size=2000, font_size=12, font_weight='bold', arrows=True)
    edge_labels = nx.get_edge_attributes(G, 'label')
    edge_weights = nx.get_edge_attributes(G, 'weight')
    combined_labels = {key: f"{edge_labels[key]} ({edge_weights[key]:.1f})" for key in edge_labels}
    nx.draw_networkx_edge_labels(G, pos, edge_labels=combined_labels, font_color='gray')
    plt.show()

node_colors = {
    "Person 1": "orange",
    "Person 2": "orange",
    "ball": "gray",
    "Racket": "gray",
    "Tennis court": "green",
    "net": "green"
}

G = add_relationships()
plot_circular_scene_graph(G, center_node="ball", node_colors=node_colors)

Applications of Scene Graphs

The application of scene graphs extends beyond sports imagery. In fields such as robotics and autonomous vehicles, understanding the relationships between objects is crucial for decision-making. Scene graphs can also enhance image retrieval systems by allowing searches not just for objects, but for specific interactions between those objects (e.g., "a person hitting a ball").

Additionally, scene graphs offer benefits in understanding complex environments. For instance, in the context of smart cities, scene graphs could help manage traffic by modeling how vehicles interact with traffic signals, pedestrians, and each other.

The combination of object detection and relationship extraction via Scene Graphs has a wide range of applications:

Robotics: Scene graphs can help robots understand their environment, making decisions based on object-object interactions. For example, a robot could navigate a room by recognizing that a chair is in front of a table.

Autonomous Vehicles: In autonomous driving, scene graphs can help vehicles understand traffic scenarios by recognizing not just objects (like other cars or pedestrians) but also their spatial and behavioral relationships (e.g., car A is turning left, pedestrian is crossing the street).

Image Retrieval: Imagine searching for images based on relationships, not just objects. Instead of simply searching for "tennis", you could search for "person hitting ball on tennis court" — a much more specific and accurate search query powered by scene graphs.
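Relationship-based retrieval can be illustrated with plain (subject, predicate, object) triples. The sketch below is a toy in-memory index, not a real retrieval system; the image names, the triples, and the `find_images` helper are all made up for illustration.

```python
def find_images(index, query):
    """Return names of images whose scene graph contains every query triple.

    `index` maps an image name to a set of (subject, predicate, object)
    triples. A `None` in a query triple acts as a wildcard.
    """
    def matches(triple, pattern):
        return all(p is None or p == t for t, p in zip(triple, pattern))

    return sorted(
        name
        for name, triples in index.items()
        if all(any(matches(t, q) for t in triples) for q in query)
    )


# Hypothetical scene graphs for three images.
index = {
    "tennis_match.jpg": {
        ("person", "hitting", "ball"),
        ("person", "on", "tennis court"),
    },
    "empty_court.jpg": {("net", "on", "tennis court")},
    "beach.jpg": {("person", "hitting", "ball"), ("person", "on", "sand")},
}

query = [("person", "hitting", "ball"), ("person", "on", "tennis court")]
print(find_images(index, query))  # ['tennis_match.jpg']
```

A keyword search for "ball" would also return the beach image; requiring both triples narrows the result to the tennis match.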

Now Hook Everything Up with an LLM to Generate a Story

# Imports needed by this section. The LangChain import paths are an assumption
# and vary between LangChain versions.
from PIL import Image
from transformers import CLIPModel, CLIPProcessor
from langchain_openai import ChatOpenAI
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

# Step 1: Build the scene graph and save it as an image.
# plot_and_save_scene_graph is not defined in this article; it is presumably a
# variant of plot_circular_scene_graph that saves the figure to `filename`.
G = add_relationships()
scene_graph_image_path = "scene_graph.png"
plot_and_save_scene_graph(G, center_node="ball", node_colors=node_colors, filename=scene_graph_image_path)

# Step 2: Load the CLIP model to process the scene graph image
clip_model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
clip_processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")

# Load the saved scene graph image
image = Image.open(scene_graph_image_path)

# Step 3: Preprocess the image
inputs = clip_processor(images=image, return_tensors="pt")

# Step 4: Pass the image through CLIP to get image embeddings (use get_image_features(), not get_text_features())
# Note: `outputs` is not used below; the prompt receives only the fixed description string.
outputs = clip_model.get_image_features(**inputs)
image_text_description = "Extracted visual relationships from the scene graph created for a tennis match."

# Step 5: Use LangChain's ChatOpenAI with the gpt-4o-mini model
llm = ChatOpenAI(model="gpt-4o-mini", temperature=0.7)
prompt_template = PromptTemplate(
    input_variables=["description"],
    template="Here is a description of a tennis match: {description}. Write a detailed story about what is happening."
)
chain = LLMChain(llm=llm, prompt=prompt_template)

# Generate the story based on the extracted relationships
story = chain.run(description=image_text_description)

Generated Story of the Input Image

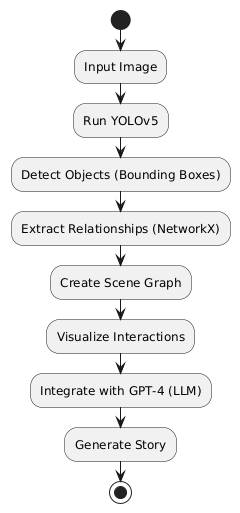

How It Works: A Quick Recap

Input Image: Represents the step where the image is input into the system.

Run YOLOv5: The object detection process using YOLOv5.

Detect Objects: The bounding box detection of objects in the image.

Extract Relationships: Using NetworkX to determine the relationships between detected objects.

Create Scene Graph: Build the scene graph representing the relationships.

Visualize Interactions: The interactions between objects visualized in the graph.

Integrate with GPT-4: Pass the visual relationships to a large language model (gpt-4o-mini in the code above) for storytelling.

Generate Story: The final output as a generated story based on the image and scene graph.
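For the step that passes the visual relationships to the language model, one option is to turn the graph's labeled edges into sentences and use that text as the prompt description. The following is a minimal sketch, assuming the edges are available as `(subject, predicate, object, weight)` tuples; the `describe_relationships` helper is hypothetical and the sample edges copy a few of those defined in `add_relationships()`.

```python
def describe_relationships(edges, min_weight=0.0):
    """Turn (subject, predicate, object, weight) tuples into one description.

    Edges are listed from highest to lowest weight, and edges below
    `min_weight` are dropped.
    """
    kept = [e for e in edges if e[3] >= min_weight]
    kept.sort(key=lambda e: e[3], reverse=True)
    return " ".join(f"{s} is {p} {o}." for s, p, o, _ in kept)


# With the NetworkX graph from this article, the tuples could be built as:
#   [(u, d["label"], v, d["weight"]) for u, v, d in G.edges(data=True)]
edges = [
    ("Person 1", "about to hit", "ball", 3.0),
    ("Person 1", "holding", "Racket", 4.0),
    ("ball", "above", "net", 2.0),
    ("Person 1", "on", "Tennis court", 1.0),
]
description = describe_relationships(edges, min_weight=2.0)
print(description)
# Person 1 is holding Racket. Person 1 is about to hit ball. ball is above net.
```

The resulting string could be passed as the `description` variable of the prompt template, so that the story is grounded in the graph's actual edges.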

Source Code:

GitHub: bhaskatripathi/sceneGraphs

About the Author

Bhaskar Tripathi holds a Ph.D. in Computational & Financial Mathematics. He is a leading open-source contributor and the creator of several popular open-source libraries on GitHub, such as pdfGPT, text2diagram, sanitized gray wolf algorithm, tripathi-sharma low discrepancy sequence, TypeTruth AI Text Detector, HypothesisHub, and Improved-CEEMDAN, among many others.

Website: https://www.bhaskartripathi.com
